Cache and Buffers

In Linux memory management, cache and buffers are portions of RAM that the kernel uses to speed up I/O, each for a different purpose. Here's how they work in detail:

Cache in Linux

What it is:

Cache in Linux refers to a portion of RAM that holds recently and frequently accessed data from storage (e.g., file contents and filesystem metadata). The kernel manages this area dynamically to speed up future access to the same data.

Purpose:

To reduce the need to fetch data repeatedly from slower storage devices (e.g., hard drives or SSDs), improving overall performance.

How it Works:

When a file is read from disk, the kernel keeps a copy of the file's data in the page cache (a part of RAM).

If the same file or block is accessed again, the kernel serves it from the cache instead of reading it from the disk.

Dynamic Nature:

The size of the cache is not fixed; it grows and shrinks based on system demand. For example:

If an application requests more memory, the kernel may shrink the cache to free up space.

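When applications are not using all of the RAM, the kernel lets the cache grow into the unused portion as files are read and written, since memory left empty provides no benefit.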
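The hit, miss, and shrink behavior described above can be illustrated with a small toy model. This is only a sketch: the class name `ToyPageCache`, its methods, and the simple least-recently-used policy are assumptions made for illustration, and the kernel's real reclaim logic is considerably more involved.

```python
from collections import OrderedDict


class ToyPageCache:
    """Toy LRU model of a page cache; not the kernel's actual algorithm."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.pages = OrderedDict()  # block number -> data, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, block):
        if block in self.pages:
            self.hits += 1
            self.pages.move_to_end(block)  # mark as most recently used
            return self.pages[block]
        self.misses += 1
        data = f"data-for-block-{block}"  # stand-in for a slow disk read
        self.pages[block] = data
        self._evict()
        return data

    def shrink(self, new_capacity):
        """Model memory pressure: hand cache space back to applications."""
        self.capacity = new_capacity
        self._evict()

    def _evict(self):
        while len(self.pages) > self.capacity:
            self.pages.popitem(last=False)  # drop the least recently used block


cache = ToyPageCache(capacity=4)
for block in [1, 2, 3, 1, 2]:
    cache.read(block)
print(cache.hits, cache.misses)  # prints "2 3"

cache.shrink(1)  # an application needs the memory
print(list(cache.pages))  # prints "[2]"
```

The first three reads miss and populate the cache, the repeated reads of blocks 1 and 2 are served from memory, and shrinking the cache keeps only the most recently used block.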

Types of Cache in RAM:

Page Cache: Stores file data.

dentry and inode Cache: Speeds up filesystem operations by caching directory entries and inodes.
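On a Linux system, the sizes of these caches can be read from /proc/meminfo. The sketch below assumes the usual "Name: value kB" layout of that file. The helper names `parse_meminfo` and `load_meminfo` are hypothetical, and the `SAMPLE` values are made up so the example also runs where /proc/meminfo is unavailable. The `Cached` field reports the page cache, and `SReclaimable` reports reclaimable slab memory, which is where the dentry and inode caches live.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {field: number} (kB for most fields)."""
    info = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # skip lines that are not "Name: value"
        parts = rest.split()
        if parts and parts[0].isdigit():
            info[name.strip()] = int(parts[0])
    return info


# Made-up values, used only as a fallback and for illustration.
SAMPLE = """\
MemTotal:       16303428 kB
MemFree:         1234567 kB
Buffers:          204800 kB
Cached:          8123456 kB
SReclaimable:     512000 kB
"""


def load_meminfo(path="/proc/meminfo"):
    """Read the live file if present, otherwise fall back to SAMPLE."""
    try:
        with open(path) as f:
            return parse_meminfo(f.read())
    except OSError:
        return parse_meminfo(SAMPLE)


info = load_meminfo()
for field in ("Cached", "SReclaimable"):
    print(f"{field}: {info.get(field, 0) / 1024:.0f} MiB")
```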

Buffer in Linux

What it is:

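Buffers in Linux are areas of RAM used to temporarily hold data being transferred between the operating system and storage devices. In /proc/meminfo and tools such as free, the "Buffers" figure specifically counts memory used for raw disk blocks, such as filesystem metadata.

Purpose:

To bridge the speed gap between fast processors and slower I/O devices (e.g., disks). Buffering lets the CPU continue working instead of waiting for each transfer to complete.

How it Works: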

For write operations, data is first written to the buffer in RAM. Later, the kernel flushes this data to the storage device in larger chunks, which is more efficient.

For read operations, the buffer holds data read from storage, ensuring the CPU has immediate access to it.
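The deferred write path can be seen from an application's point of view. In this minimal sketch, the function name `durable_write` and the file name are hypothetical. Note that Python adds its own user-space buffer on top of the kernel's, so two steps are needed: `flush()` hands the bytes to the kernel, and `os.fsync()` waits until the kernel has written them to the device rather than leaving them in RAM for a later flush.

```python
import os
import tempfile


def durable_write(path, payload):
    """Write bytes and wait until the kernel has pushed them to storage."""
    with open(path, "wb") as f:
        f.write(payload)      # lands in Python's user-space buffer first
        f.flush()             # hand the bytes to the kernel (held in RAM for now)
        os.fsync(f.fileno())  # block until the kernel writes them to the device


with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "example.bin")
    durable_write(target, b"hello" * 1000)
    size = os.path.getsize(target)

print(size)  # prints "5000"
```

Without the `os.fsync()` call the write would still complete eventually, but the data could sit in RAM for a while and be lost if power failed before the kernel flushed it.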

Dynamic Nature:

Like the cache, buffers are also dynamically allocated within RAM based on workload and system requirements.

Are Cache and Buffers Physically Separate?

No, cache and buffers are logical allocations within RAM. They are not special hardware components or reserved physical memory regions. Instead, the kernel uses parts of the available RAM dynamically for these purposes.

How Do Cache and Buffers Differ from Hardware Caches?

Cache in RAM vs. CPU Cache:

CPU Cache (e.g., L1, L2, L3) is a hardware feature built into the processor, designed to speed up access to frequently used instructions or data.

RAM Cache (Linux page cache) is managed by the operating system and is used for data fetched from storage devices.

Buffer in RAM vs. Device-Specific Buffers:

Many devices (e.g., hard drives, SSDs, and network cards) have their own hardware buffers to temporarily hold data during transfers.

Linux buffers in RAM work at a higher level, coordinating data flow between the operating system and hardware.

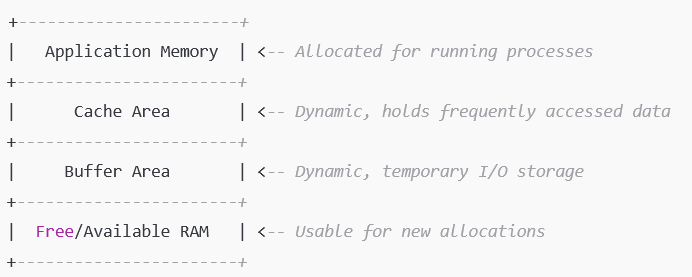

Visualizing Cache and Buffers in RAM

How Cache and Buffers are Managed by the Kernel

Dynamic Allocation:

Cache and buffer areas shrink or grow based on system demand. The kernel prioritizes applications over cache and buffers when memory is constrained.

Reclaiming Memory:

When applications need more memory, the kernel can:

Drop Cache: Discard cached data that has not been used recently. Unmodified (clean) data can simply be dropped, because it can be re-read from storage if needed again.

Flush Buffers: Write modified (dirty) data back to storage devices so that the RAM holding it can be freed.
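The kernel does this reclaiming on its own, but the same two steps (write back dirty data, then drop the clean pages) can be requested for a single file. The sketch below uses `os.posix_fadvise` with `POSIX_FADV_DONTNEED`, which is advisory: the kernel may ignore it. The function name `drop_file_from_cache` is hypothetical. System-wide, root can achieve a similar effect by running sync and then writing 1, 2, or 3 to /proc/sys/vm/drop_caches, which is normally only useful for testing.

```python
import os
import tempfile


def drop_file_from_cache(path):
    """Ask the kernel to discard cached pages for one file (advisory only)."""
    if not hasattr(os, "posix_fadvise"):
        return False  # call not available on this platform
    fd = os.open(path, os.O_RDONLY)
    try:
        os.fsync(fd)  # dirty pages cannot be dropped, so write them back first
        # Offset 0 with length 0 means "from the start to the end of the file".
        os.posix_fadvise(fd, 0, 0, os.POSIX_FADV_DONTNEED)
    except OSError:
        return False  # the filesystem or platform refused the request
    finally:
        os.close(fd)
    return True


with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"x" * 4096)

dropped = drop_file_from_cache(tmp.name)

with open(tmp.name, "rb") as f:
    data = f.read()  # still readable; fetched from storage again if it was dropped

os.unlink(tmp.name)
print(dropped, len(data))
```

Dropping cached pages never loses data, because anything modified is written back first; the only cost is that the next read has to go to the storage device.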

Summary

Cache and buffers are logical areas in RAM used to improve I/O performance.

They are not physically separate hardware components but are dynamically allocated by the kernel.

Cache holds frequently accessed data, while buffers handle temporary I/O data transfers.

Because the kernel manages them dynamically and transparently, otherwise unused RAM improves I/O performance while remaining available to applications whenever they need it.